MySQL 8.x Upgrade

Where does one begin to describe a project that took months for something that is supposed to be simple? It's a journey that ensured excitement, challenges, gnashing of teeth and certainly tested one's perseverance.

Hierdie plasing is ook in Afrikaans beskikbaar :)

A year's work completed

Well, mostly. This was truly a thriller of a project and I did not think it's ever going to take that long. Sometime in April 2023, things changed in the team and suddenly I found myself in the position to perform a task with knowledge needed in an area that was never one of my strong points. In my studies after school, the database module was the only one I had to repeat. Here I found myself thrown in at the deep end in April 2023 to fulfil a database administrator's tasks.

It's been a grueling process, but there's no going back now. On April 11, 2024 the summit was reached and the client's data started running on the MySQL 8.0.36 primary instances. The proverbial Rubicon has been crossed.

There is just too much to put into a post, I could almost write a book! The idea was simple. Upgrade a MySQL 5.7 database to MySQL 8.0.x. The missing details are all around the dependent components of the platform's ecosystem, as well as how long the platform has been running, and how much maintenance was left out. Also how well or up to date the documentation has been kept.

MySQL 8.0

The first development version of MySQL 8.0.0 was released in 2016 (2016-09-12) and the first generally available version, 8.0.11, in 2018 (2018-04-19). The latest stable version supported by AWS is 8.0.36. So there was a lot of development going on behind the scenes.

I.e. the catch-up effort did not begin until five years later, and ended up taking a year. There will always be finger pointing to say this or that is the reason for the process being more difficult or that it would be easier if platform documentation was kept up to date, meaning all the obstacles would be less of a problem. But anyway, it had to happen and we set off with great determination.

Soon such a project will have to be undertaken again, in 2026, according to the Oracle MySQL Blog.

Exploration of the jungle

Initially, the biggest challenge was to find out what exactly is going on in the whole database. Schemas, tables, entity relations, character and collation sets.

With time, new columns were added, new tables and relations, and the discipline to ensure that consistent usage was applied was missing. Certainly ignorance and a lack of experience also has a role to play, especially as a person thrown in at the deep end to update something that has been touched by persons who have moved on a long time ago. Who can you ask if what you want to do is right or wrong? Especially if Internet searches leave you in situations where you seem to be the only one that has a specific problem!

In the case of the technology stack there is a long story involving several languages. It seems that in the beginning Microsoft .Net was used for the platform. Later on some of the services moved to PHP but parts remained behind (PHP to .Net calls required). Later again, parts moved from PHP to NodeJS (TypeScript transpiled to JavaScript), where parts remained behind again. Some API calls pass through four stacks for one piece of functionality! Some processes communicate directly to the database, either from NodeJS or from PHP. Transitions were made over time without consolidating, which makes it complex to find all the corner cases. The point is that all these different components communicate with the same database in their unique ways, and can never break.

There is heavy reliance on the internal capabilities of MySQL, such as triggers, functions and stored procedures. The knowledge and wisdom of the initial architects disappeared as time passed and it was not recorded in any traceable form. This means that the whole upgrade process was undertaken with a lot of effort and a high level of uncertainty.

During my career I have learned that databases are best when used to merely store data in an efficient manner. Processing and reporting then takes place by means of processing the data elsewhere, outside the database. Offloading heavy operations to be concluded elsewhere ensures that CPU cycles are not wasted when access to the data is first priority. It does not mean that such functionality should not be used at all, but it should not be abused. At scale, all performance issues and inefficient queries are amplified and causes issues with reliable service. (Let's be honest, SQL is simple when kept simple, but some scenarios reach levels of difficulty where not even the author can follow what it's supposed to do when trying to decipher it after a while when trying to optimise...) If a result can not be produced within an immutable limited time, and you keep running into that limit, it is a sign that alternative workflows must be followed - not everything can always be completed in a brief amount of time.

The downside of embedding business logic into the database engine, far away from the rest of the codebase, is that much knowledge of the data domain requires separate inspection and expertise in the language of the database engine. I reckon any software developer or architect would be able to point out that it is a problem in the long run. Something we had to (and still do) live with.

Statistical overview

To get an idea of the size of the project I will indicate some figures.

For the main database:

- Database storage size: about 335G

- Main server: 1

- Replicating servers: 2

- Base Tables: 181

- Tables as views: 77

- Columns: 2475

- Routines:

- Functions: 13

- Procedures: 427

- Triggers: 165

- SQL source lines of code used during upgrade: 26305 lines, of which

- 3913 blank and

- 946 comments

- Clojure source lines of code used during upgrade: 1031 lines of which

- 226 blank and

- 104 comments

Over the period of the project, one of the JIRA placeholders had about ninety comment entries to capture updates and observations.

Resources

In the beginning manual exploration of the database was done as I learned what to look for before the upgrade could begin. There is a guide compiled and mentioned in many posts online, which helps to do the preparation. There is also upgrade check software that cross-examines and inspect the target database and then it gives a report of everything that is wrong or could be a problem.

After this later started to become a big project, I realized that tools were going to be needed. Because of the critical importance of the process, I started to write a utility program in a language or technology I trust with my life - Clojure. I did it with the Babashka dialect because it already contains most of the software libraries I need. Yes, I know it was a unique choice, but as I say, I trust it with my life. And I had challenges that I felt would be much more difficult in other languages and technologies.

The concept is that I started doing specialized comparisons between production and test databases and it got pretty complicated. I want to connect to both databases at once and compare the same type of data coming from both, without switching between them all the time.

There are probably stand-alone programs that can do this, but I wasn't aware of it, and it meant that I would have to focus my attention elsewhere first to learning the new software, and then I still woudln't know if I trust it.

Try and try again

Early on it became clear that there were going to be many challenges with so much that can go wrong or data which can become corrupted and that would be painful. The pressure was heavy on my shoulders, simply because it is so important to get it right.

Due to the volume of the data, this involved a very time-consuming process. I don't mean an hour or two. It took days. Closer to the end, the process of start to finish took about two to three days if absolutely everything fell into place perfectly. It helped to use larger compute instances, but costs also had to stay in check.

Timelines and surprises

By December 2023, we have transitioned to the User Acceptance Testing (UAT) environment from MySQL 5.7.x to MySQL 8.0.28 and was excited to be close to the point of switching production too! Initial tests in the test environment have been promising and we started to prepare the new production replication instances. Of course it had to happen that the extended support period is getting closer very quickly... In January 2024, we had to start determining where we would end up, and whether it will become a problem: AWS is going to start charging extra rates for extended support, and we don't know if the switch will go smoothly!

To keep everything operational we had to upgrade to MySQL 5.7.44 so that an automatic upgrade to 8.0.x would not be attempted. This caused stakeholders to start shuffling around awkwardly because we would need several hours of downtime to do that particular upgrade. But we did it and with the version at 5.7.44 it was a safety net that could give us time to make sure that the preparation for 8.0.28 is done as well as possible.

Even closer to the point of switching, we started doing homework on when the next upgrade will be suggested from AWS's side, and oh my, 8.0.28 was about to be deprecated early 2024! Along with this news, we also found out that there will be a new challenge.

Microsoft .Net

Some of the older parts in the platform are written in C# and run on Windows with .Net. For Java there is MySQL Connector/J, and for .Net there is Connector/NET. Windows and .Net are completely out of my comfort zone and I have tried to understand as much as I can. But with all the other stuff already in my head I just couldn't make a paradigm shift to ALSO master .Net. Fortunately, we were able to prompt an ex-colleague to help and he agreed to inspect the .Net part.

There was a plan to upgrade the .Net platforms after the database upgrade was completed. It reached a point where it became clear that more issues would be resolved by upgrading the Windows servers with the .Net platform before we attempted to proceed with the database upgrade.

The big reason for this particular Connector/NET upgrade was because the older .Net couldn't talk to MySQL 8.0.36, and that's a big problem. Furthermore, there is also a specific part where the .Net part had to be able to communicate to both 5.7.44 and 8.0.36 databases. It was non-negotiable.

At this point, so many detours have been taken that it is difficult to keep up with everything that has happened, and what is yet to happen. But we continue. One of the first servers that George set up in 2016 through ClickOps was then replaced with CloudFormation. I am proud of replacing something that was first set up by a mentor. We have come a long way in learning how to do things the proper way. I have learned plenty, and yet there's more to learn.

The Rubicon

The last few days before D-day were full of preparation, planning, checking, settings, adjustments, checking and chatting to make sure everything was ready and in order. There were tasks that we could do ahead of time, so that less had to happen at once or on the day, and I'm glad we did it that way instead!

The day of the switch was grueling. So many points to check again and to keep up with the correct processes of the day. The switchover took us about four hours, and everything went well for the most part. There were snags that took us further into the rest of the day, but they were less critical and luckily an emergency roll back was not necessary. So much care, focus and hard work had been applied, that such a setback would drive me insane!

There are still rough patches to be sorted out, but it was successful, and the customer no longer has to pay extended support rates to AWS.

In closing

This was certainly a formidable challenge, but through it I learned a lot in various areas. Old cobwebs were swept away and the old shelves in my memory were dusted off! AWS RDS really makes it much easier to have peace of mind about the data being stored.

With the volume of data that had to be managed, this would have been a very difficult task on local servers.

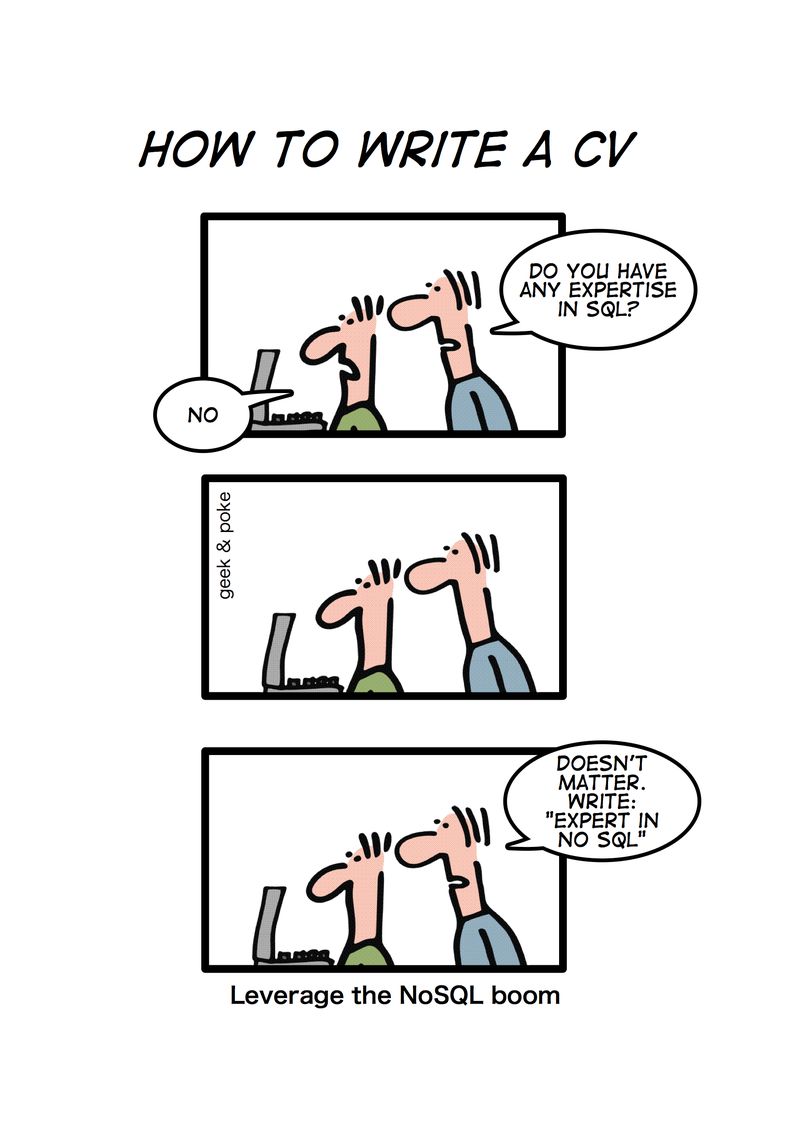

Leverage the NoSQL

I was reading up on Datalog and Datomic and this gem crossed my path: